1. Project Description:

Project name: “Zenboo”

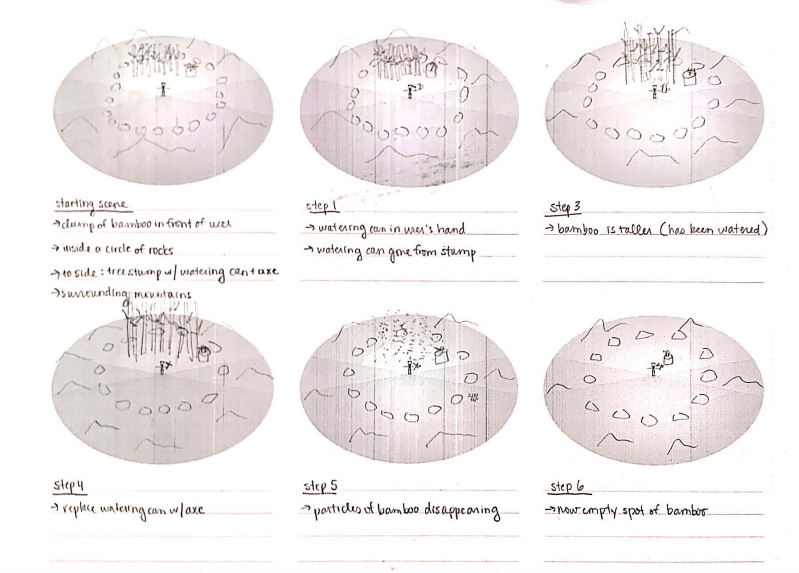

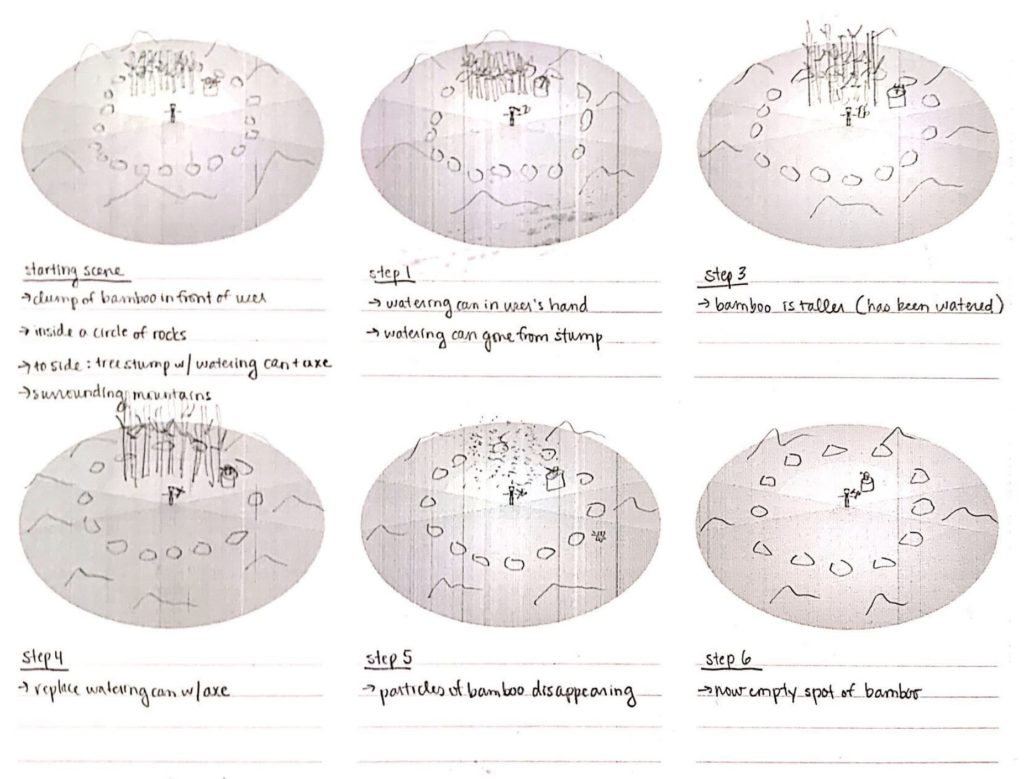

Zenboo is a relaxing space that evokes a state of Zen by giving the user calming activities (watering, growing and cutting bamboo) that let them interact with Mother Nature in a fun way. The user is surrounded by mountains, floating rocks and bamboo clumps, and finds tools nearby that hint at the activities they can start. In Zenboo, the user can water the bamboo with a watering can and watch it grow in a unique way; they can also play with the newly grown bamboo. After picking up the sickle next to the watering can, the user can swing it at a bamboo clump to make the bamboo pieces disappear. If the user waters the bamboo too much and makes it grow too fast, gravity causes some pieces of bamboo to fall to the ground, where they disappear by themselves after a few seconds!

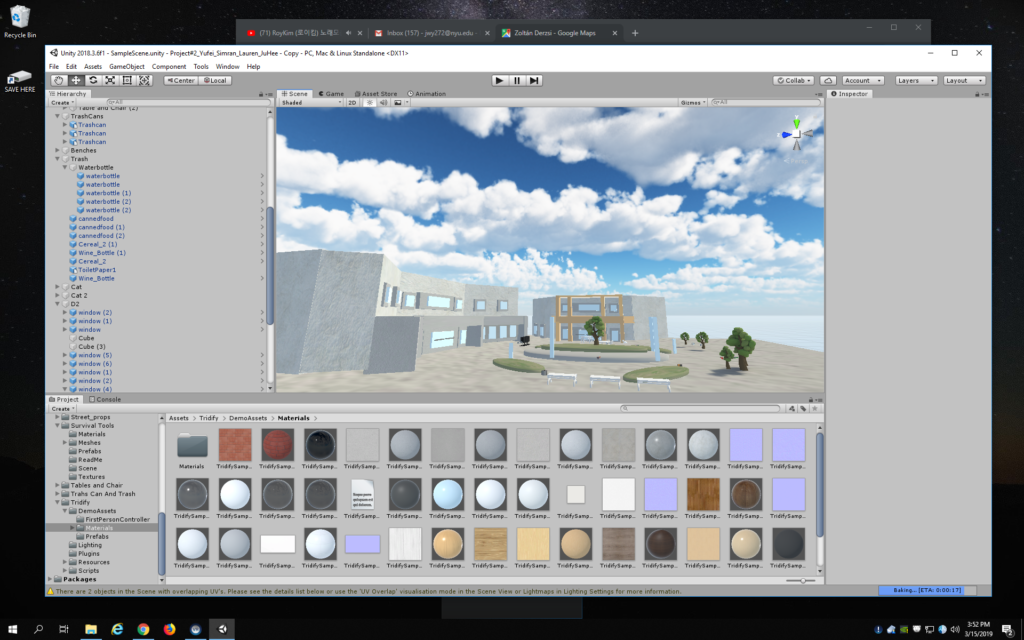

2. Process and Implementation:

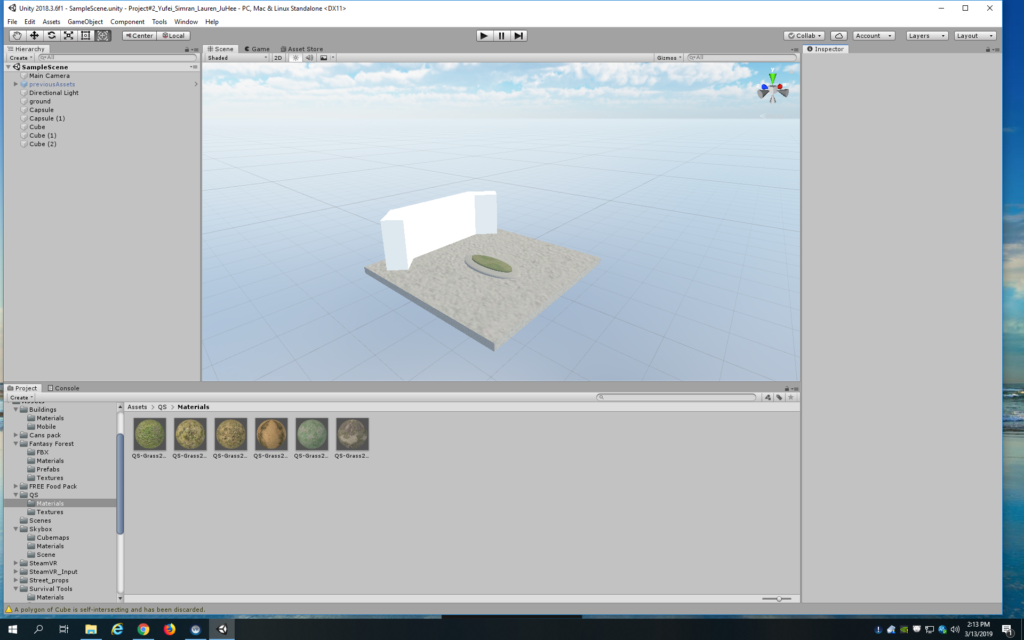

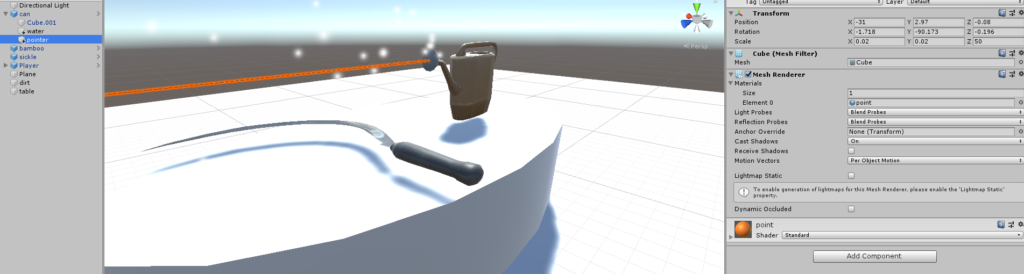

This is how we started:

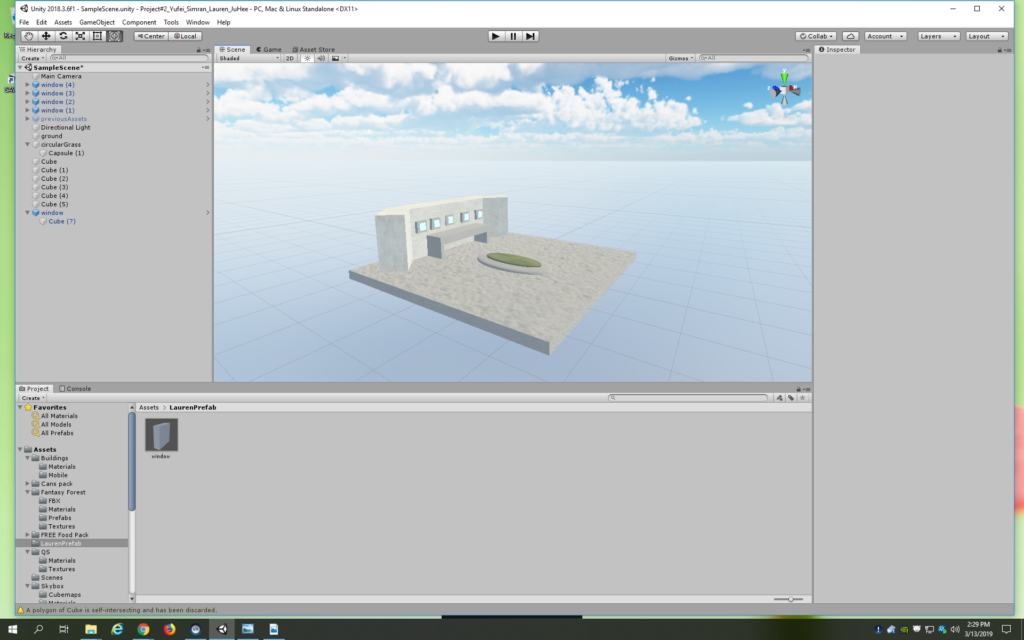

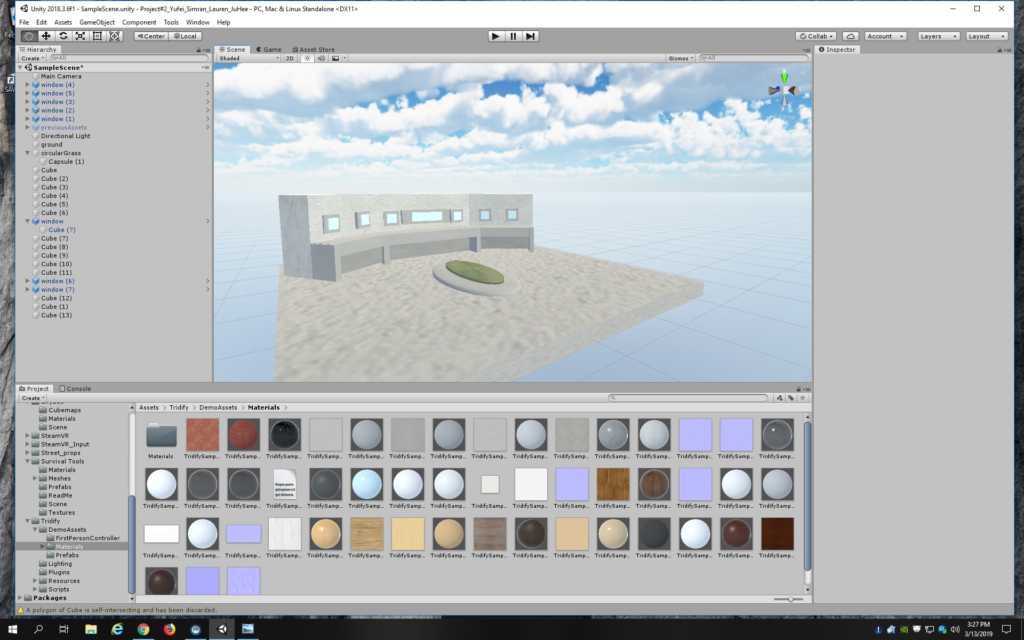

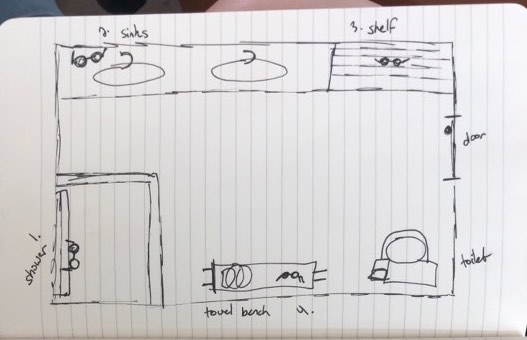

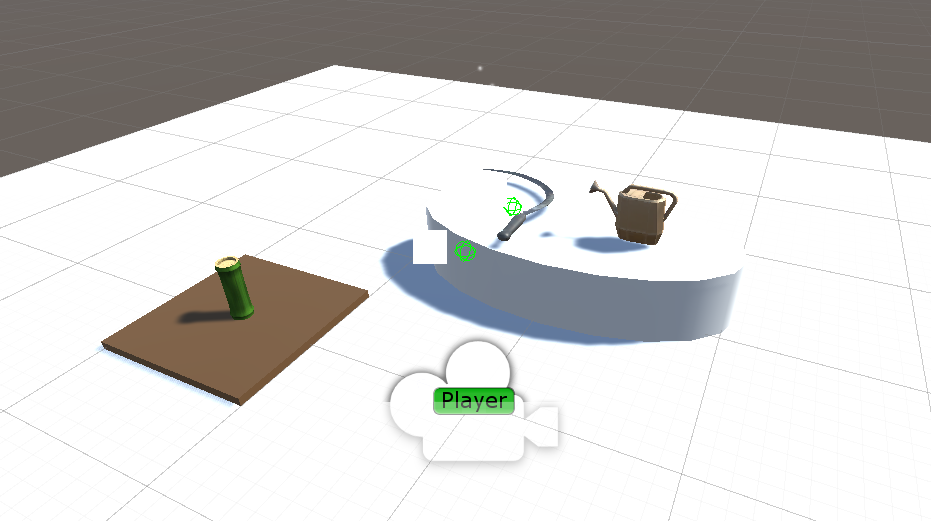

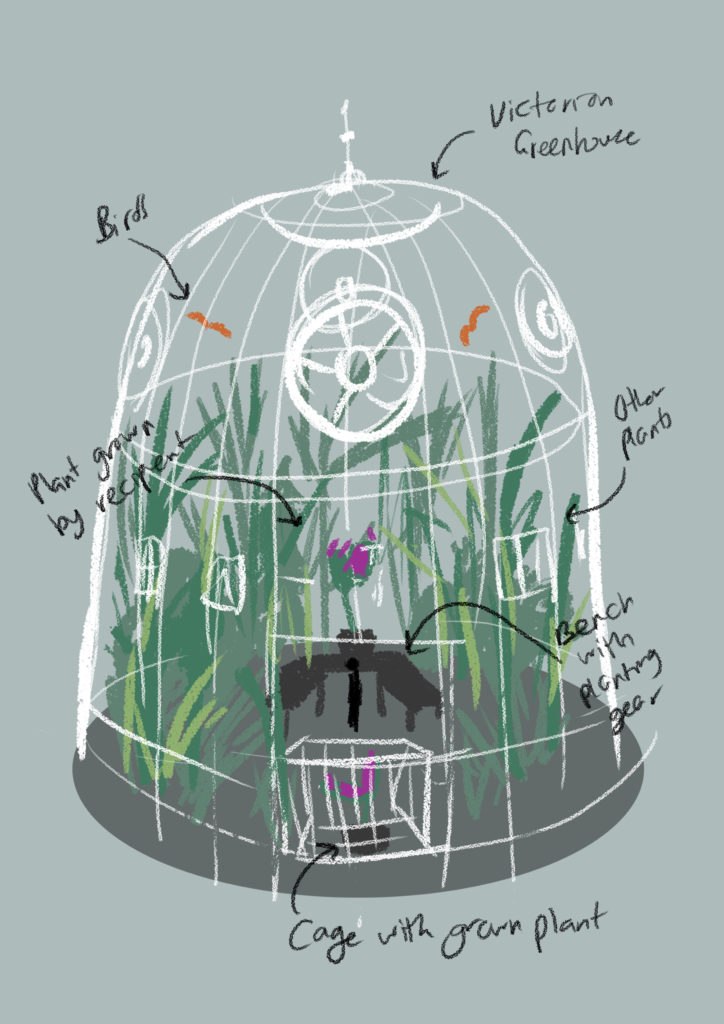

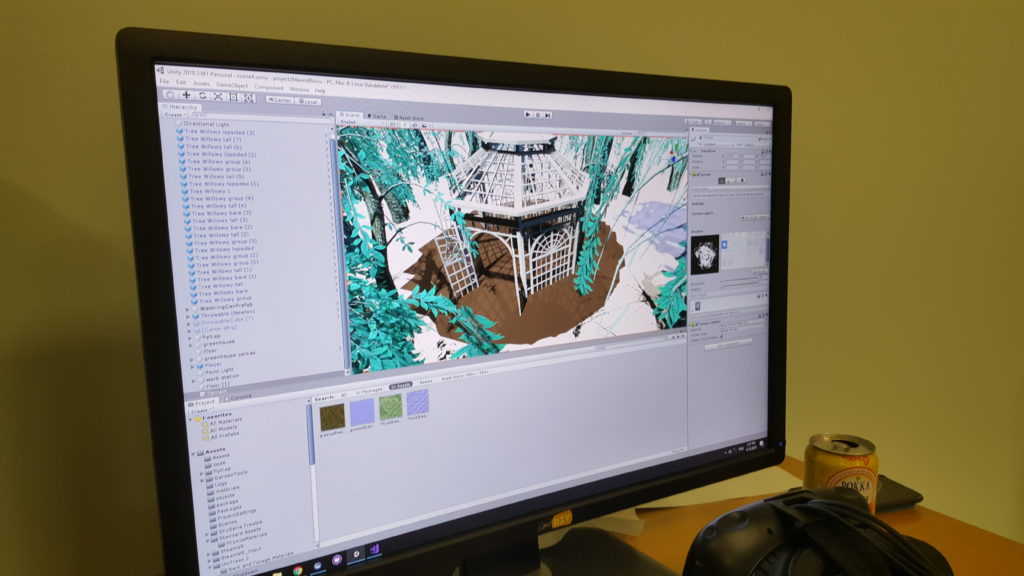

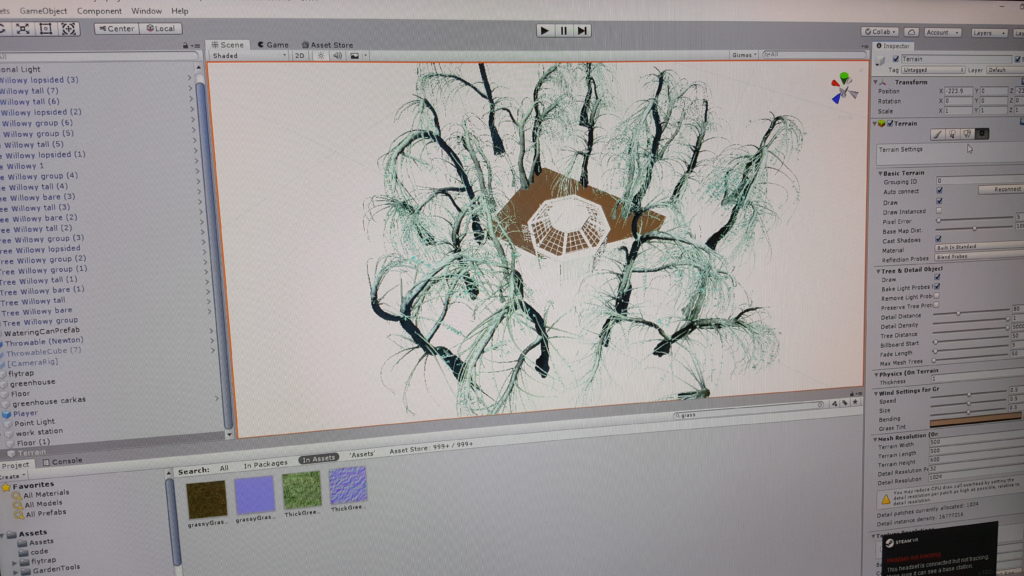

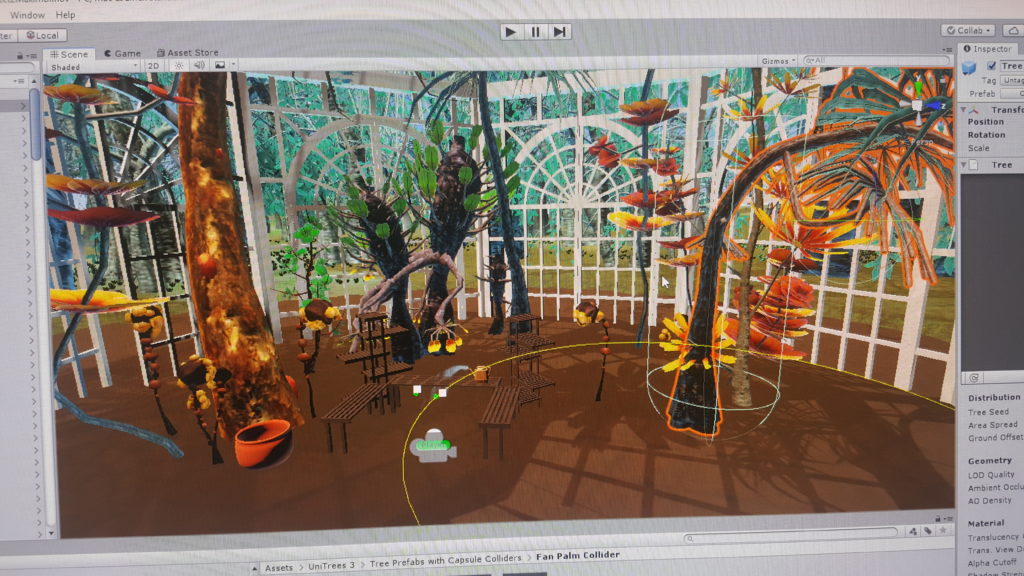

How we built the scene and the ideation behind our design choices:

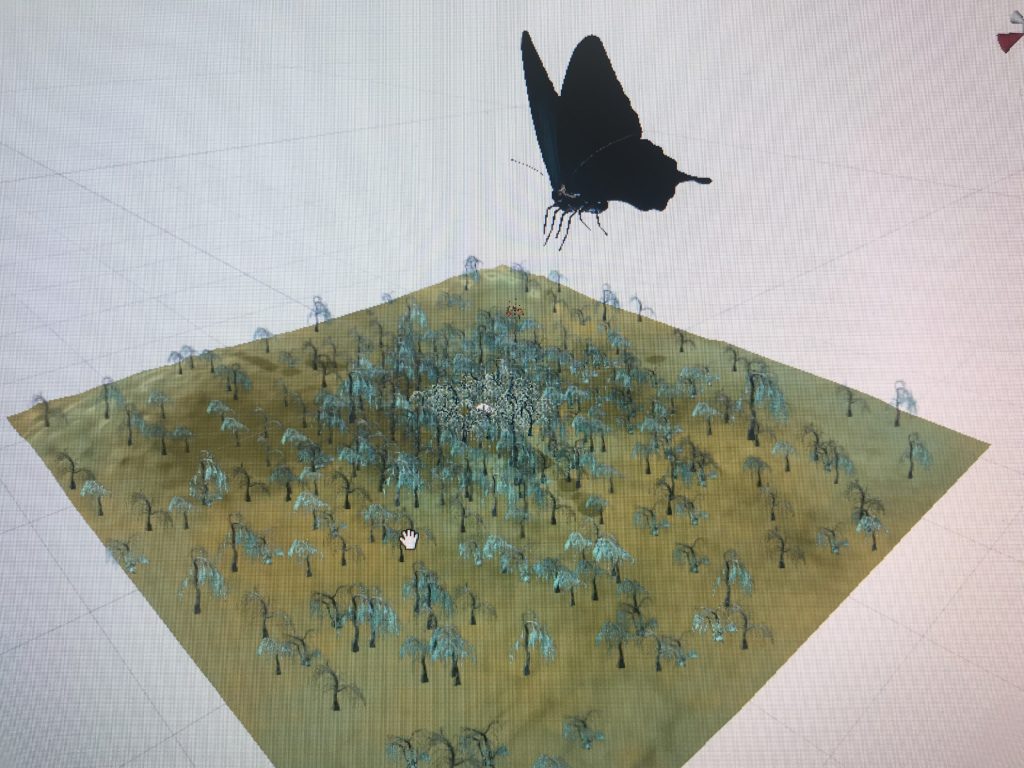

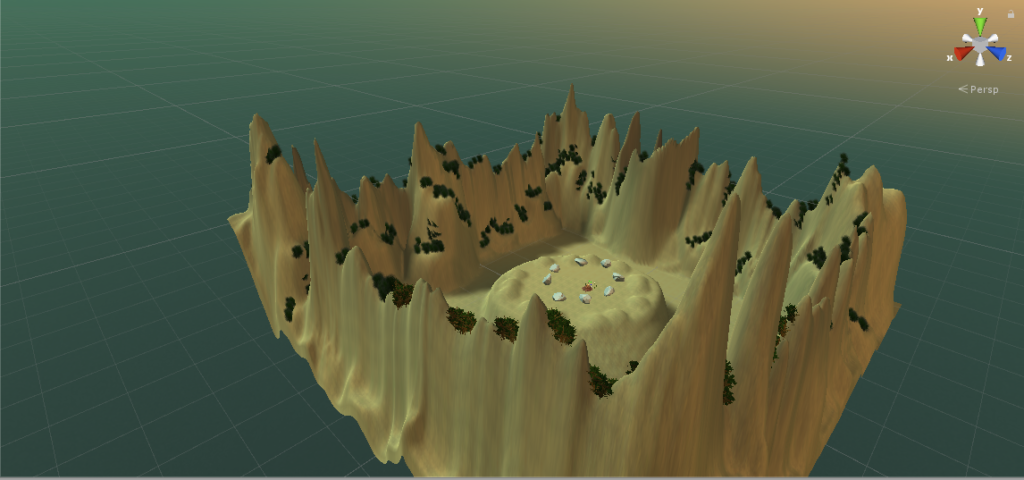

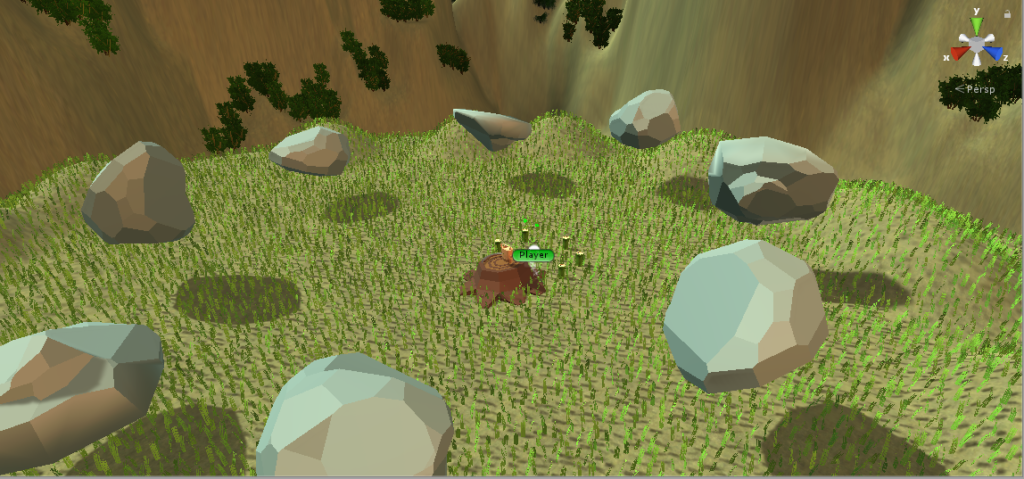

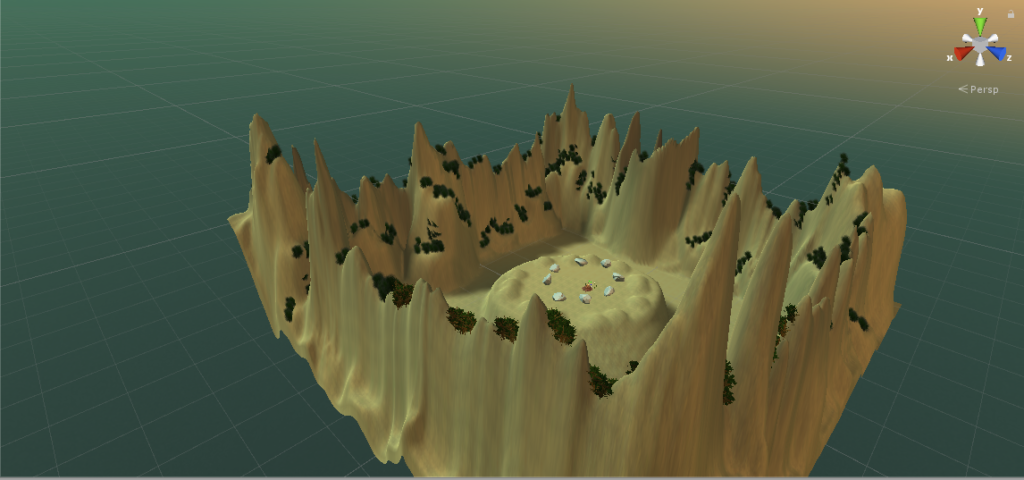

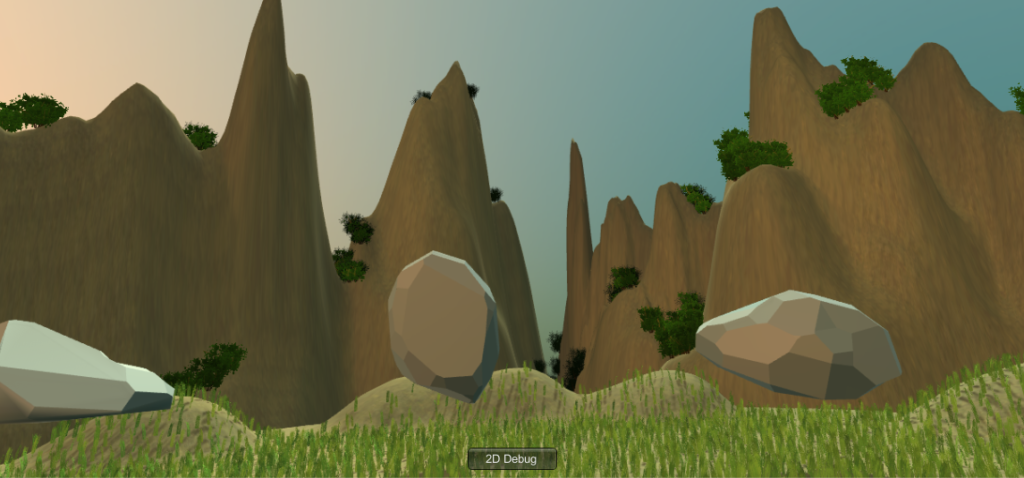

We wanted to create a space that makes people feel relaxed and that doesn’t have a lot of objects or movement to distract them from the sense of “Zen”. We therefore came up with the idea of a giant mountainous background at sunset, with the user placed in the middle. To amplify the interaction between the user and the growing plants, we also put rocks around the spot where the user stands, so that they don’t have to move much to interact with the environment.

We chose these sources of inspiration because they are very mystical and calming, which helps contribute to the relaxation aspect of Zenboo.

The design of the circle of rocks came from user feedback suggesting that the rocks could be larger and float around the user, rather than rest on the ground as we had initially planned. This turned out to have a pretty cool effect and helped create the Zen atmosphere.

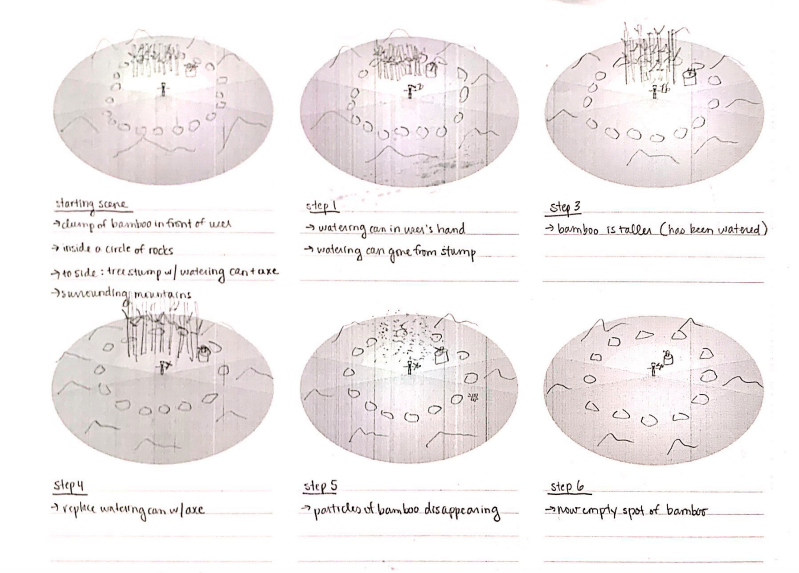

Another design decision that changed during development was the location of the bamboo. Since space is limited, having the clump of bamboo spaced out in front of the user would have been problematic, and it might not have been intuitive that the user had to walk over to it and water it. Instead, we decided to place the bamboo in a semi-circle close to and around the user. This way, the user does not have to walk very much in order to water all of the bamboo.

What would be the “everyday” thing in this world?

The simple actions and interactions, such as picking up, dropping, throwing, watering and cutting, that everybody already knows how to do the first time they see the scene. From our existing knowledge, we expect that when you water a plant it grows, and when you swing a sickle it cuts the plant. Therefore, very little explanation of how the project works is needed, and it is easy for any user to pick up.

Steps to implement the interactions (highlighting some key interactions I worked on):

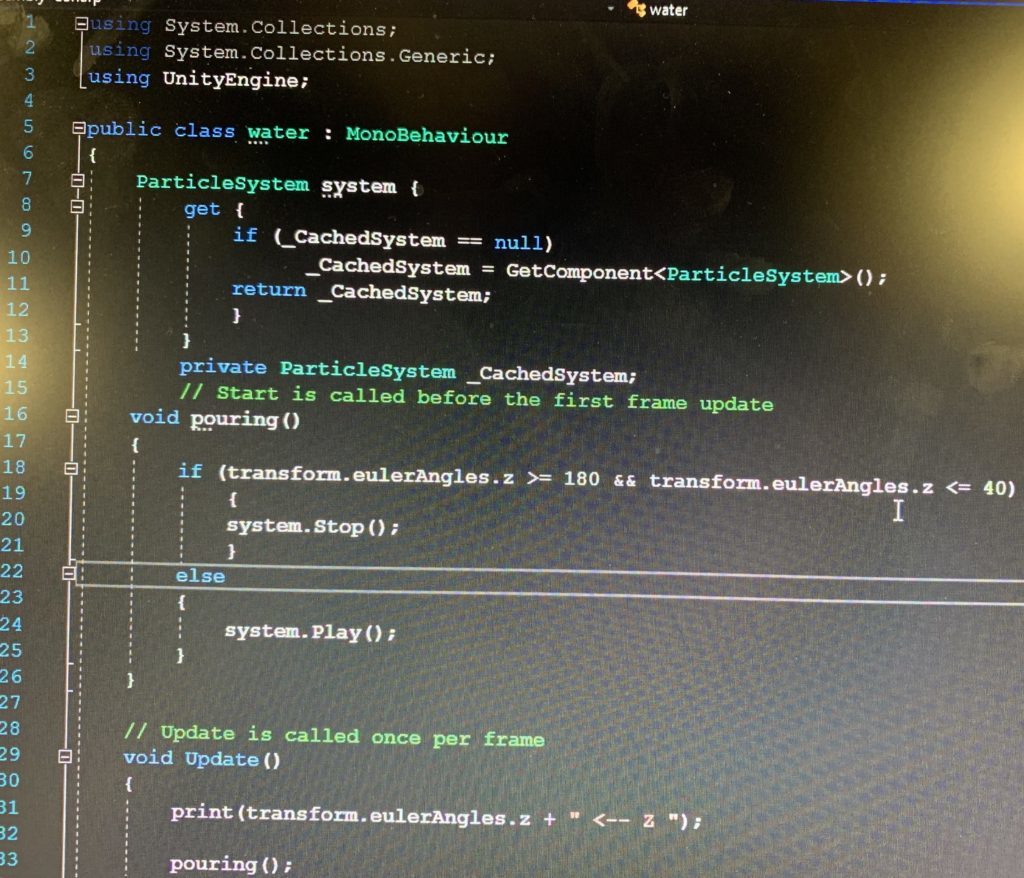

1: Particle system – water:

I worked on the particle system so that it turns on and off when an object is rotated to a certain angle: when the watering can faces downwards, the water particle system is on, and when the can is in its normal position, the particle system is off and the water effect is not shown.

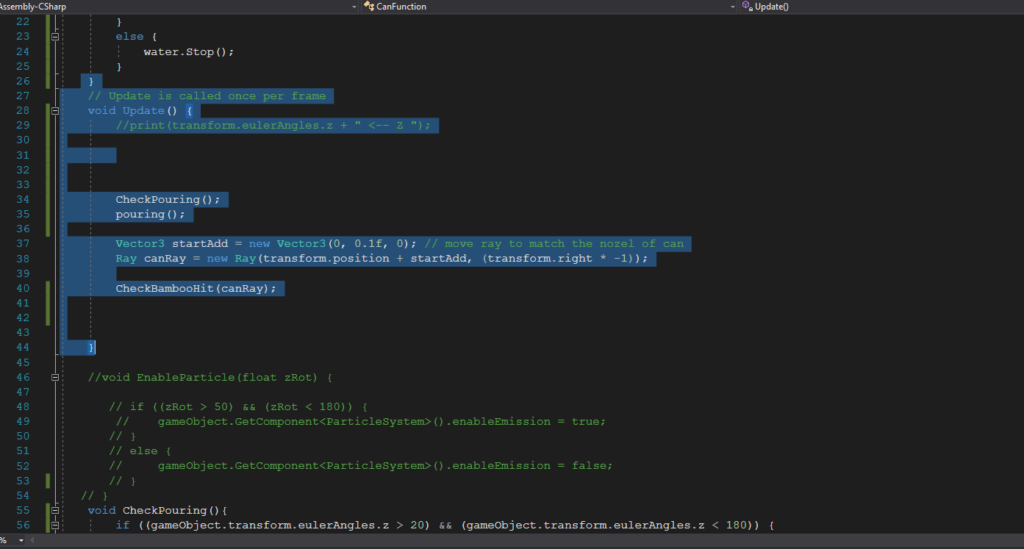

I achieved this by using transform.eulerAngles and reading the Z angle of the watering can object. We have a boolean function called “IsPouring”, so I grabbed the particle system in it and added code so that if the angle is outside the range the system stops, otherwise it plays. We call “IsPouring” inside “void Update” to make sure it runs all the time.
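The tilt check described above can be sketched outside of Unity. The snippet below is a minimal, engine-agnostic Python illustration rather than the project's actual script: `is_pouring` mirrors the “IsPouring” function named above, `WaterParticles` is a hypothetical stand-in for the particle system, and the 60 to 150 degree pouring range is an assumed placeholder, since the real thresholds are not stated. Because Euler angles are usually reported in the 0 to 360 range, the sketch wraps the angle before comparing it.

```python
def normalize_angle(degrees: float) -> float:
    """Wrap any angle into [0, 360), the range in which Euler angles are usually reported."""
    return degrees % 360.0


def is_pouring(z_angle: float, pour_min: float = 60.0, pour_max: float = 150.0) -> bool:
    """Return True when the can is tilted into the pouring range (thresholds are assumptions)."""
    return pour_min <= normalize_angle(z_angle) <= pour_max


class WaterParticles:
    """Hypothetical stand-in for the water particle system."""

    def __init__(self) -> None:
        self.playing = False

    def play(self) -> None:
        self.playing = True

    def stop(self) -> None:
        self.playing = False


def update(particles: WaterParticles, z_angle: float) -> None:
    """Called once per frame: play inside the pouring range, stop outside it."""
    if is_pouring(z_angle):
        particles.play()
    else:
        particles.stop()
```

Running the check every frame, as described above, keeps the water effect in sync with the can's current tilt instead of relying on a one-off event.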

There was a small problem when I tested the code: the particle system was always on while the scene was playing. I assumed it was disconnected from its parent object, so I added a “Print” call to the IsPouring function to check whether it was connected to the watering can while the code was running. It turned out that nothing was printed to the console log, so I dragged the particle system onto the watering can to make sure it was assigned in the component section (even though the particle system was already a child of the watering can), and then it worked.

2: Particle system – mist:

Another particle system I worked on is the mist effect, which is only triggered when the sickle is cutting (colliding with) the bamboo.

At first I was thinking about attaching the particle system (the mist) to the bamboo script, so that whenever the bamboo is detected colliding with the sickle (the sickle is hitting the bamboo), which destroys a GameObject (a piece of bamboo), the mist particle system is triggered to play. However, this design has two significant difficulties. The first is that, with OnParticleCollision, it is really hard to reposition the effect at “instantiate” time so that the mist is only shown on the specific piece of bamboo hit by the sickle (since a lot of bamboo grows out of the OG bamboo). The second is that the game object is destroyed at the same moment the child effect on it is triggered, so the effect is not shown at all: the moment it is triggered, its parent is gone and the mist has nothing to show on.

Taking these conditions into consideration, I created a new mist script just for the sickle, separate from the bamboo function, so that we don’t have to reposition the particle system for each specific bamboo. At first I tried to detect the collision of the particle system with “OnParticleCollision”, but it turned out to be very hard to detect accurately, since there is a huge number of small particles and they collide with almost everything. I therefore switched to detecting the collision of the sickle itself: once the sickle’s collider hits a game object, the particle system (mist) attached to the sickle is triggered.
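The sickle-side trigger can be illustrated with a small, engine-agnostic Python sketch. It is not the project's script: `MistEffect`, `Sickle` and `on_collision_enter` are hypothetical names standing in for the mist particle system, the sickle script and its collision callback. The optional `required_tag` filter is an assumption added for illustration; with its default of `None` the sketch behaves as described above and plays the mist on a collision with any game object.

```python
from typing import Optional


class MistEffect:
    """Hypothetical stand-in for the mist particle system attached to the sickle."""

    def __init__(self) -> None:
        self.times_played = 0

    def play(self) -> None:
        self.times_played += 1


class Sickle:
    """Owns the mist effect, so no per-bamboo repositioning is needed."""

    def __init__(self, mist: MistEffect, required_tag: Optional[str] = None) -> None:
        self.mist = mist
        # Assumed refinement: when set, only objects carrying this tag trigger the mist.
        self.required_tag = required_tag

    def on_collision_enter(self, other_tag: str) -> bool:
        """Play the mist when the sickle hits something; return whether it played."""
        if self.required_tag is not None and other_tag != self.required_tag:
            return False
        self.mist.play()
        return True
```

Because the effect lives on the sickle rather than on the bamboo piece, destroying the piece that was hit does not remove the mist along with it.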

3: Bamboo grows when hit by the particle system:

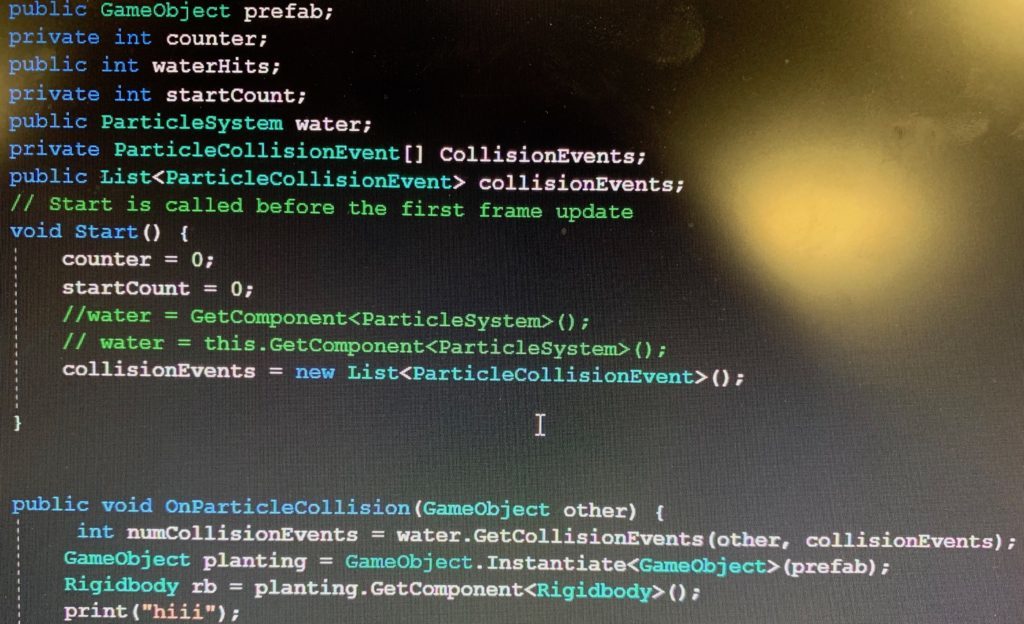

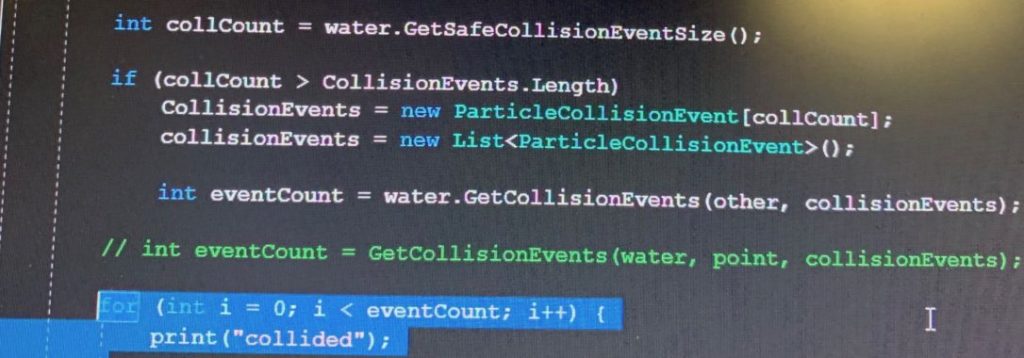

We finished this part by using OnParticleCollision to detect the collision between the bamboo and the particle system attached to the watering can. In the beginning we decided to add the particle collision detection to the BambooOG script, because the growing function is in the same script and would be easier to call. However, even when put on a different layer and set to “only collide with bamboo”, the particle system would collide with literally everything. We then tried writing the particle collision detection code only in the canfunction script and calling the bamboo grow function from there, so that the two parts do not interfere with each other. So basically, on the particle system side we say that once it collides with bamboo, it triggers the grow function from the BambooOG script, and then it worked. The code we used is shown below:
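As an illustration only (this is not the project's own script), the hand-off from the watering can to the bamboo can be sketched in engine-agnostic Python. The names `Bamboo`, `Rock`, `WateringCan` and `on_particle_collision` are hypothetical stand-ins for the BambooOG script, any other scene object, the watering can script and its particle collision callback. The idea shown is that the can side filters what was hit and only then calls the grow function that lives with the bamboo.

```python
class Bamboo:
    """Hypothetical stand-in for the bamboo script that owns the grow function."""

    def __init__(self) -> None:
        self.segments = 1

    def grow(self) -> None:
        # Each call adds one new bamboo segment.
        self.segments += 1


class Rock:
    """Any other scene object the water particles may hit."""


class WateringCan:
    """Handles particle collisions and forwards only bamboo hits to grow()."""

    def on_particle_collision(self, other: object) -> bool:
        """Return True if the hit object was bamboo and was told to grow."""
        if isinstance(other, Bamboo):
            other.grow()
            return True
        # Water hitting anything else is ignored.
        return False
```

Keeping the filter on the can side means the bamboo script never has to deal with stray particle hits from other objects.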

4: Floating effect of rocks:

In order to improve the user experience and create the sense of ZEN, I added the floating effect:
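The write-up does not say how the floating motion was produced. One common way to get a gentle hovering effect is to offset each rock's height with a sine wave, which the following engine-agnostic Python sketch illustrates. The function name `floating_height` and the default amplitude, frequency and phase values are assumptions chosen for illustration, not values from the project.

```python
import math


def floating_height(
    base_y: float,
    time_s: float,
    amplitude: float = 0.25,
    frequency_hz: float = 0.2,
    phase: float = 0.0,
) -> float:
    """Return the rock's height at time_s, bobbing around base_y.

    The height always stays within base_y +/- amplitude.
    """
    return base_y + amplitude * math.sin(2.0 * math.pi * frequency_hz * time_s + phase)
```

Giving each rock a different `phase` would keep the rocks from rising and falling in lockstep, which tends to look more natural.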

3. Reflection/Evaluation: This should discuss your expectations and goals in the context of what you felt was achieved with the finished piece.

I think we as a team successfully achieved our initial goals and expectations for this project: we have a beautiful Zen environment and we built the growing and cutting bamboo interactions. Surprisingly, while coding the bamboo functions we also found a cool way to keep each bamboo at the same X and Z position, so even if the user plays with the bamboo, lifts it up or touches it, the bamboo comes back to the same position. We also achieved our initial idea of limiting the user’s movement by putting the semi-circle of bamboo in front of the user. For the coding part I was particularly involved in, I met my initial expectations: making the particle systems, triggering the bamboo grow function when the bamboo collides with the water particle system, creating the floating rocks effect, and creating the mist effect when the sickle collides with other game objects.

However, there are some things that need to be reconsidered or improved in this project. In our initial plan, we designed the circle of rocks to limit the user’s movement, but in the real VR scene those rocks just became part of the distant background and didn’t really limit the user’s movement. What’s more, I think our project should have an ending: what we have now is just endless watering and cutting of bamboo, and it should stop or show something else at some point. One part I could potentially work on further is making the collision detection more selective. For example, the mist particle system should only be triggered when the sickle collides specifically with bamboo, not with any game object.