Our initial idea was to tell the story of the development of the UAE, based on the theme “renewal”. I took a core class last semester called the history and environment of the Middle East, and I was surprised to learn that this region used to have oases in ancient times. We thought it would be interesting to show how this place changed from oasis to desert, and then from desert to a modern city with skyscrapers and trees planted along the roads. We planned to set our story on an isolated island representing the UAE, because recreating the whole country would be too challenging.

Then we decided that it would be too complicated to tell the history of the UAE from the beginning. We also failed to figure out how to make the transitions between the three scenes (oasis, desert, and modern city) natural and intuitive, so we chose to focus on the last two: how the UAE developed from desert into a modern city. After some research, we identified three key stages of economic growth in the UAE and listed the elements that should appear in each stage:

Stage #1: desert, tent, barren, camel, cactus, pearl picking

Stage #2: souq, discovery of oil, the union of the seven emirates

Stage #3: Asian Cup, skyscraper, NYU, city park, luxury car

In terms of transitions between scenes and the experience of time, the player will be an old man who has lived in the UAE for his entire life. Through interactions and conversations with the people around him in the three stages, the player gets a sense of his age. For example, in stage #1 the player sees his parents collecting pearls; in stage #2 he trades with other people in middle age; and in stage #3 he lives happily with his grandchildren.

However, we still felt that the elements we wanted to include in our environment were only loosely connected to the player: if the player can see the history of the UAE from a third-person view, why design a VR experience for users to interact with the environment? Following that question, we tried to narrow the scope of the story: instead of telling the story of the whole country, we wanted to focus on what this old man experienced during his life. The experience would start with the player lying in bed in his own luxury bedroom, and we designed three interactions based on the three stages mentioned above:

Interaction #1: The player approaches the window. When he looks outside, he sees the modern city (stage #3).

Interaction #2: The player approaches the wall and touches the photo hanging on it, which triggers his midlife memory (stage #2).

Interaction #3: The player approaches a desk with a handmade toy on top. When he picks up the toy, he remembers how his mother made it for him when they lived in tribes (stage #1).

Then we realized another issue with this design: after the player enters stage #2, for example, how does he come back to the main scene (the bedroom)? We wanted to avoid back buttons in order to keep the experience coherent. Bearing that question in mind, we tried to come up with interactions that could actually drive the story forward and make the transitions between stages more fluid. We thought that we could set our environment at the corniche. The player could collect pearls at the beach, and as he collects more pearls, the environment changes: more people gather, and the souq gradually forms. Then the player can trade with other people, and as more people trade, skyscrapers appear. However, a huge problem with this idea is that the rapid development of the country over the past several decades is due to the discovery of oil, yet we could not come up with a way to give the player a role in that discovery.

At this point, Vivian and I felt trapped by the huge idea of presenting the history of the UAE, and we realized that it was an impossible task given the number of factors involved in the story. We decided to start over and look at the other two themes.

I had another idea: simulating how people feel after taking hallucinogens, the effects of which have been at the center of debate for decades. Meanwhile, Vivian found an interesting project, a 3D data visualization of four seconds of brain activity in someone who fell in love. We thought it would be interesting to create a multi-sensory experience of love and betrayal. We decided to name our project Betray, which will be a bittersweet musical love story conveyed by beats, background music, and key words that follow the timeline of the story.

Our environment will be quite illusory. We will create an endless world, as if players are floating in the universe, but we will also distort the color of the background so that the environment looks unfamiliar. A list of words associated with love and betrayal (maybe also trust?) will appear in order, accompanied by background music. Players can interact with the words: by clicking the trigger, throwing a word away, and so on, they can trigger different sound and visual effects. There will be a certain level of randomness in the experience, but certain properties of the effects, such as the volume and pitch of the sound effects and the movement of the visual objects, will be decided by how the player interacts with the word and by the category of the word. For example, if the player interacts with a word associated with love, the sound and visual effects will be soft and calming, while a word associated with betrayal will produce harsh and intense effects.
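The mapping described above, from word category and type of interaction to effect properties with a bit of randomness, could be sketched roughly as follows. This is only an illustration in plain Python, not our actual project code: the `Effect` fields, the baseline numbers, the interaction names `"trigger"` and `"throw"`, and the `jitter` parameter are all assumptions made up for the example.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Effect:
    volume: float  # 0.0 (silent) to 1.0 (full volume)
    pitch: float   # playback-rate multiplier, 1.0 = unchanged
    speed: float   # movement speed of the visual objects


# Hypothetical baselines: soft and calm for love, harsh and intense for betrayal.
BASE = {
    "love": Effect(volume=0.3, pitch=1.2, speed=0.5),
    "betray": Effect(volume=0.8, pitch=0.7, speed=2.0),
}

# Hypothetical intensity multipliers: throwing a word is stronger than a click.
INTENSITY = {"trigger": 1.0, "throw": 1.25}


def effect_for(category, interaction, rng, jitter=0.1):
    """Return an Effect chosen by word category and interaction type.

    The category sets the baseline, the interaction scales volume and
    speed, and a small random factor (within +/- jitter) varies each value.
    """
    base = BASE[category]
    scale = INTENSITY[interaction]

    def wobble(value):
        return value * (1 + rng.uniform(-jitter, jitter))

    return Effect(
        volume=min(1.0, wobble(base.volume * scale)),  # clamp to full volume
        pitch=wobble(base.pitch),
        speed=wobble(base.speed * scale),
    )
```

Passing in a seeded `random.Random` keeps the randomness reproducible while testing, for example `effect_for("love", "trigger", random.Random(0))`.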

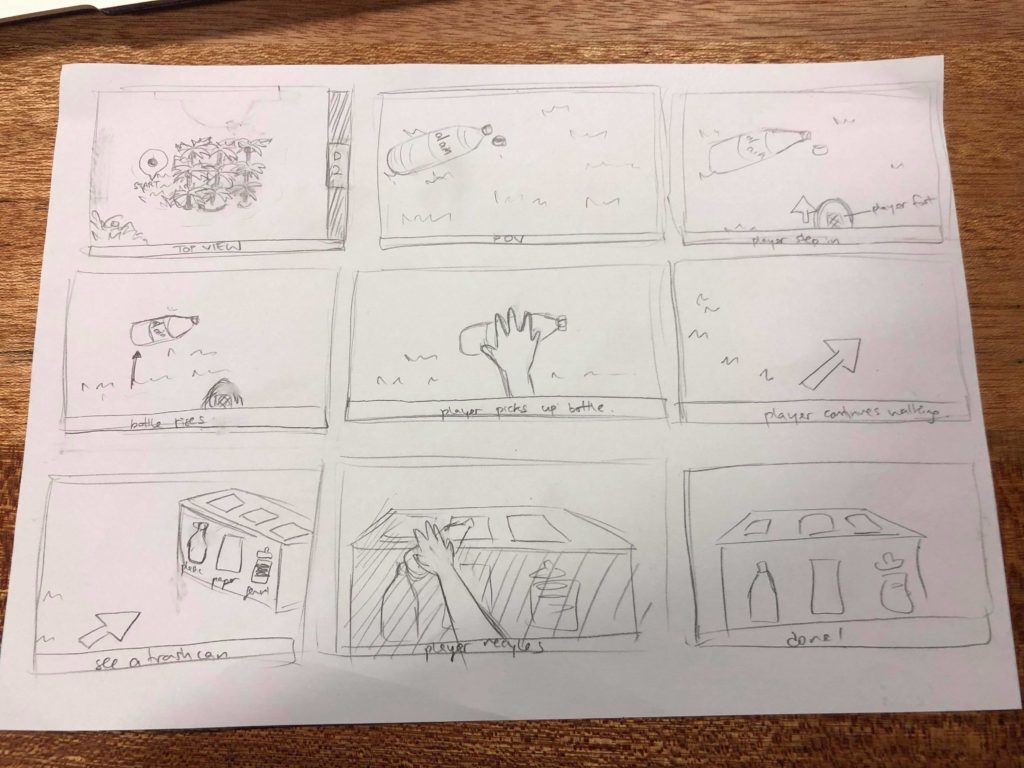

See Vivian’s blog post for our storyboard.

I made a demo in p5.js for the visual effects of our project. However, after presenting it to the class, I realized that I had focused too much on how our project looks and ignored the story behind it. What message are we trying to convey to users through the sound and visual effects? I also shared an alternative idea: creating a virtual version of the Museum of Broken Relationships, where users can play around with objects in the environment and figure out the story behind each object. Sarah suggested that the storyline should have top priority if we want this to be an immersive storytelling experience.

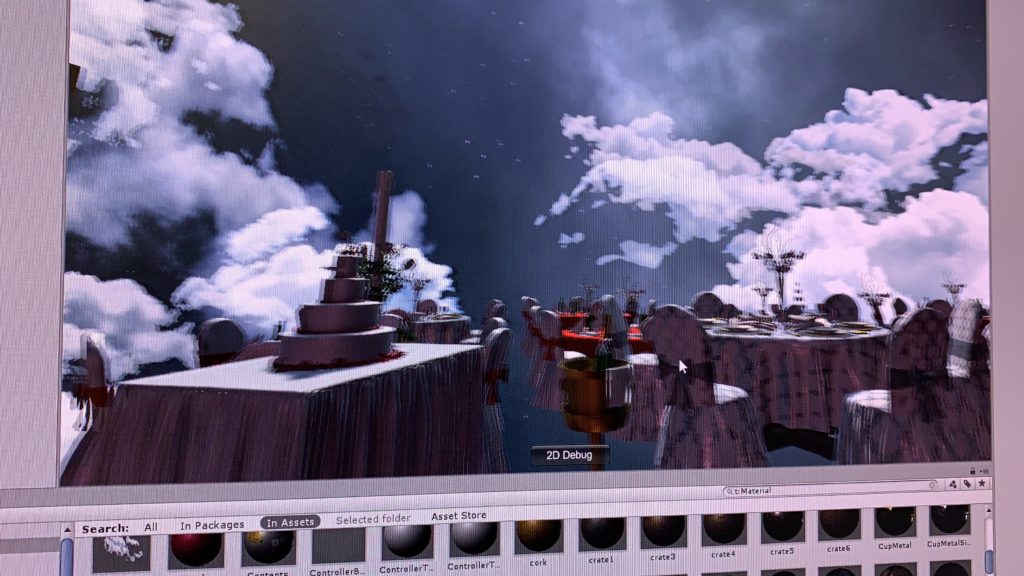

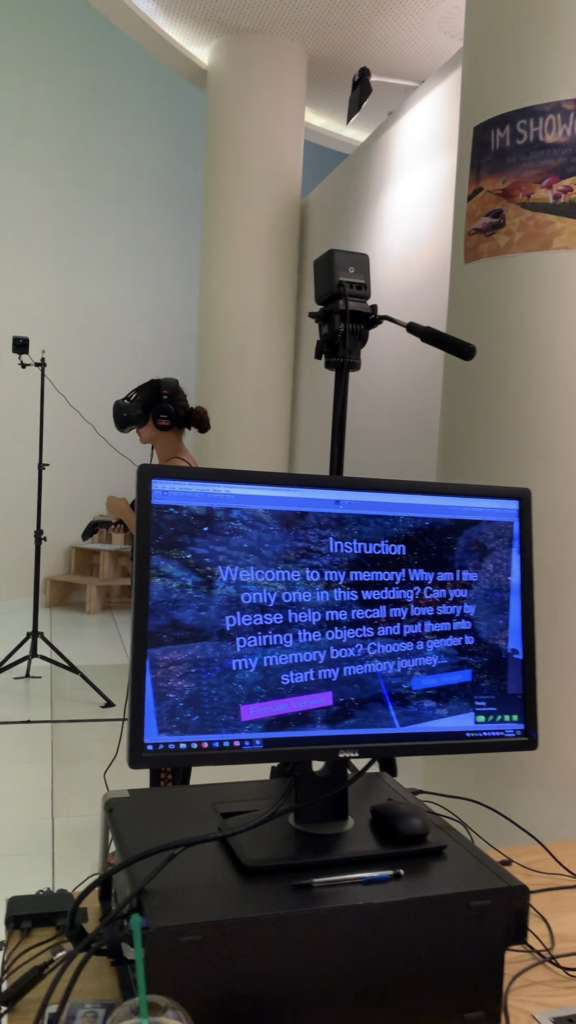

After discussing with Vivian, we came up with the idea of having multiple objects and a cardboard box in the environment. By pairing the objects up and putting them into the box, users can trigger the voiceover for one part of the story. The order in which users put the objects into the box does not matter, since we wanted to leave the user enough room for imagination and free interpretation of the story. We also decided to have a female and a male version of the story, because we believe there are gender differences in the understanding and perception of love. Users will be able to choose whether to enter the female or the male version.
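The pairing logic can be sketched independently of any game engine. The following is a minimal illustration in plain Python rather than our actual implementation: the `MemoryBox` class, the lowercase object names, and the clip names are hypothetical. An object waits in the box until its partner arrives, and completing a pair returns the voiceover clip to play, whatever order the objects are dropped in.

```python
class MemoryBox:
    """Tracks objects dropped into the box and reports completed pairs."""

    def __init__(self, clips):
        # clips maps a frozenset of two object names to a voiceover clip name.
        self._clips = dict(clips)
        self._partner = {}
        for pair in self._clips:
            a, b = tuple(pair)
            self._partner[a] = b
            self._partner[b] = a
        self._waiting = set()  # objects in the box whose partner is missing
        self.played = []       # clips triggered so far, in order

    def put(self, item):
        """Drop an object into the box.

        Returns the clip name if this completes a pair, otherwise None.
        Raises KeyError for an object that belongs to no pair.
        """
        partner = self._partner[item]
        if partner in self._waiting:
            self._waiting.remove(partner)
            clip = self._clips[frozenset({item, partner})]
            self.played.append(clip)
            return clip
        self._waiting.add(item)
        return None


# Hypothetical clip names for two of the pairs in the story.
CLIPS = {
    frozenset({"dog food", "dog cage"}): "puppy_memory",
    frozenset({"pen", "notebook"}): "notebook_memory",
}
```

For example, after `box = MemoryBox(CLIPS)`, calling `box.put("dog food")` returns `None`, and a later `box.put("dog cage")` returns `"puppy_memory"`, even if other objects were dropped into the box in between.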

Here is our story script:

Male Version:

Movie Ticket & Popcorn Box: I finally got the courage to invite her out for a movie. I touched her hand when we reached for the popcorn at the same time.

Paired Toothbrush & Cup: The first night she slept over, she brought her toothbrush with her. The next morning, she swapped my toothbrush for a paired one.

Dog Food & Dog Cage: She always wanted a pet dog. I brought home a cute puppy one day, and I couldn’t forget how excited she was: her dream finally came true.

Iron & Suit: First day of work after my promotion. She ironed my suit for me. Looking forward to our future.

Ring & Ring Case: I saved all my money for this ring, prepared for a month for this. Today is finally the day.

Passport & Flight Ticket: Leaving the country tomorrow. I guess I will have to put this memory in the box and leave it behind…

Female Version:

Movie Ticket & Popcorn Box: He finally asked me to hang out. I’ve been waiting for this for a month. He’s so shy but so cute.

Paired Toothbrush & Cup: I was like a kid after I stayed at his place. I paired our toothbrushes up.

Dog Food & Dog Cage: Suddenly one day, he brought home a cute puppy. I was so surprised! I always wanted a pet. I guess now we are a family of three.

Iron & Suit: I want to make his first day after promotion special, so I got up early to iron his suit. Looking forward to our bright future.

Ring & Ring Case: I said yes.

Passport & Flight Ticket: His work left us no choice but to lock all these memories up, forever.

I was a bit worried that we would not be able to find prefabs for the passport, flight ticket, movie ticket, ring, and ring case online, and when we looked in the asset store, we really couldn’t find them. So we changed our storyline a bit, based on what we were able to find online.

New Version:

Male Version:

Paired Toothbrush & Cup: Paired toothbrushes are the first step to show we are a family, she says.

Candles & Plates:

I finally got the courage to invite her out for a dinner.

Candlelight on her cheeks is the cutest thing ever.

Dog Food & Dog Cage: I brought home a new family member that day, and I couldn’t forget how excited she was: her dream finally came true.

Iron & Iron Board:

First day of work after my promotion.

She ironed my suit in the morning.

Looking forward to our bright future.

Pen & Notebook:

She used to keep all of our memories in this notebook …

but it’s meaningless now.

Vase & Watering Can:

Roses die. So do our promises…

Eventually it turns out to be a wedding without her…

Female Version:

Paired Toothbrush: Pairing our toothbrushes makes us look more like a family…

Dog Food & Dog Cage: That day he brought home a cute puppy. I was so surprised! I always wanted a pet. I guess now we are a family of three.

Iron & Iron Board: I want to make his first day after promotion special, so I got up early to iron his suit. Looking forward to our bright future …

Candles & Plates: Can’t ask for anything better than a candlelight dinner for the first date.

Pen & Notebook: It’s been a while since he left me… I used to keep a diary every day when we were together… How stupid I was.

Vase & Watering Can: Roses die. So do our promises… Eventually it turns out to be a wedding without him…