For our group project, Shenuka and I worked on Galaxyze, an open-ended VR environment that allows the following interaction/engagement:

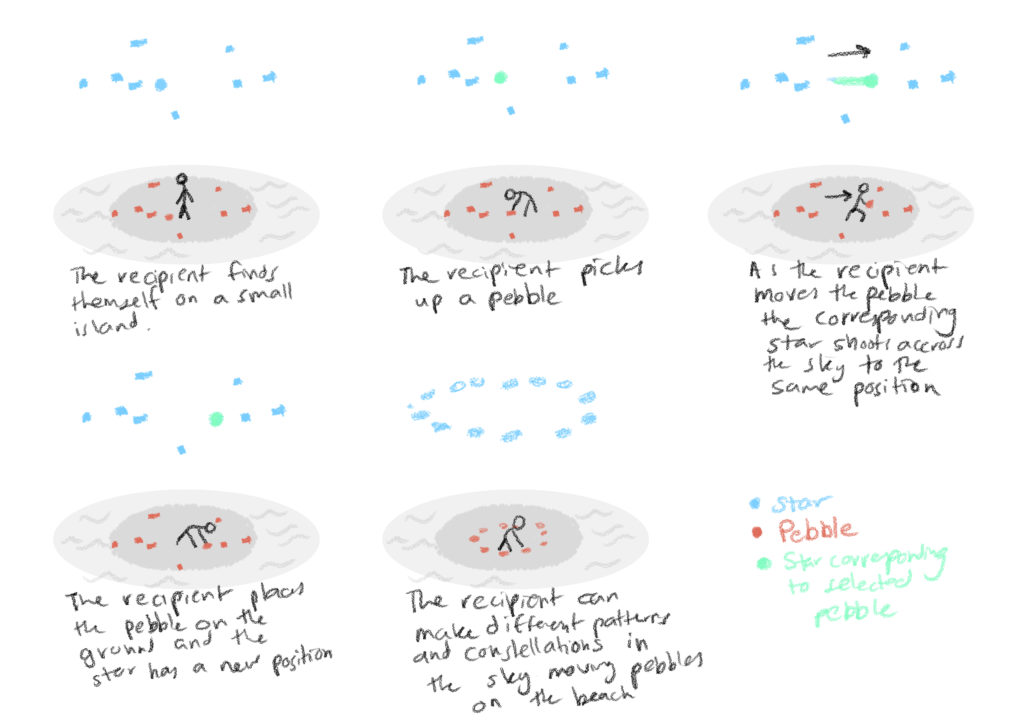

- The user controls the stars in the night sky by rearranging pebbles on a beach.

- The user can make an impact on a cosmic scale with a trivial action.

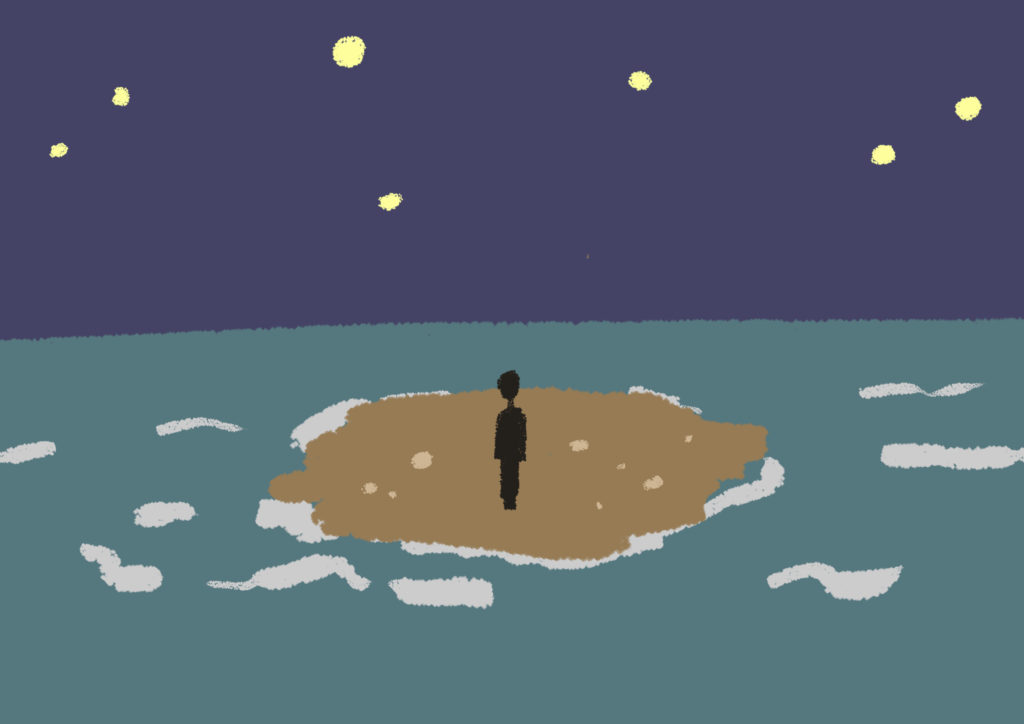

The goal of this project is to let the user become a creator in a fictional world that is also quite relatable, in the sense that it’s a deserted island surrounded by the sea (much like the Saadiyat bubble). The user can draw patterns in the sky with trails and constellations, feeling powerful but also isolated by their limited mobility in the world.

This idea first arose from a chapter of Italo Calvino’s Invisible Cities. We wanted to explore the “stuff of story”: what makes a story, the lack of a narrative, and the open-endedness of it.

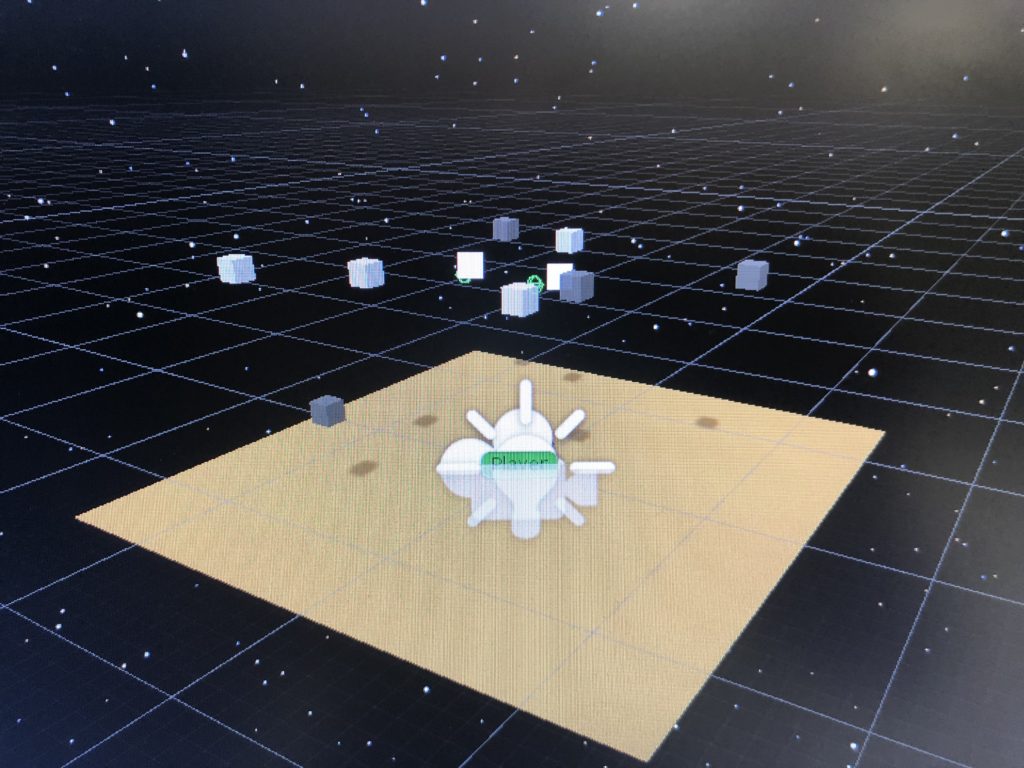

The environment consists of the following, based on the idea that the user must feel small in the vastness of the cosmos above and the ocean below.

- Island: Terrain tool

- Pebbles/Water: Unity Asset Packs

- Stars: Spheres with glowing material

- Sky: Night sky skybox

- Trails: Particle systems set to fade with time

The scripts we wrote are the following:

- Mapping the position of the stones (x and z values) to the positions of the stars.

- Detecting when all the stones have been thrown in order to play the ending animation.

- Switching from the ambient music to the ending music.
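The logic behind these three scripts can be sketched roughly as follows. This is an engine-agnostic illustration in Python, not the project's actual Unity C# code. The names `SKY_HEIGHT`, `SKY_SCALE`, `star_position`, `all_stones_thrown`, and `pick_music` are hypothetical, and the rule that a stone counts as "thrown" once it drops below the water level is an assumption made for the example.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Vec3:
    x: float
    y: float
    z: float


# Hypothetical tuning values; the real ones are not given in this post.
SKY_HEIGHT = 100.0  # fixed height of every star above the beach
SKY_SCALE = 20.0    # how far a star moves per unit of stone movement


def star_position(stone: Vec3) -> Vec3:
    """Map a stone's ground position (x and z) to a star position in the sky.

    The stone's own height is ignored; every star sits at SKY_HEIGHT.
    """
    return Vec3(stone.x * SKY_SCALE, SKY_HEIGHT, stone.z * SKY_SCALE)


def all_stones_thrown(stones: Sequence[Vec3], water_level: float = 0.0) -> bool:
    """Return True once every stone is below the water surface.

    Assumption: a stone is 'thrown' when its y value is under water_level.
    An empty scene never triggers the ending.
    """
    return bool(stones) and all(s.y < water_level for s in stones)


def pick_music(stones: Sequence[Vec3]) -> str:
    """Choose which track should be playing for the current stone state."""
    return "ending" if all_stones_thrown(stones) else "ambient"
```

In a game engine, the mapping would run every frame for each stone and star pair, while the check for the ending would only need to run when a stone lands in the water.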

Looking back, our project came out differently from how we first imagined it. We were able to complete it thanks to multiple rounds of player testing, experiments, and feedback from peers and Sarah.

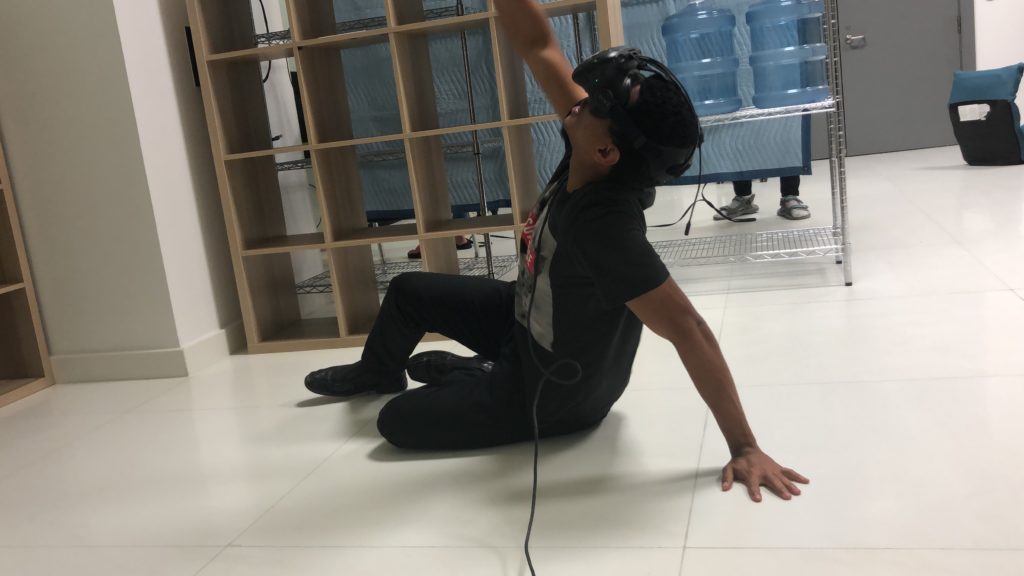

Here’s some footage of Craig testing it out at the IM Show exhibition (mute the sound, it’s a little noisy):

The main challenges we faced were in writing the scripts, because it was the first time either of us had used C#. However, after ample research, late-night experiments, a crazy amount of logs, tests, and help from friends, we were able to solve them all!

If we had more time, I think we could work more on the environment to make it feel more alive. This could involve adding:

- splashing noises and wave sounds

- dolphins jumping out of the water from afar

- more stars in the sky

- shooting stars here and there

- different types of assets to pick up: not just pebbles, but also shells and other broken/abandoned remnants that we find on the beach these days. I think this could add more to the backstory and give the user more contextual evidence as to where they are.