Project Description

For project #2, our group decided to build an experience that tackles the problem of sustainability on campus. We wanted to set our scene on campus, with trash lying on the ground. In the real world, if someone passes by trash and ignores it, there are no consequences. Besides, people tend to assume that someone else will deal with it. We wanted to raise awareness within the NYUAD community by creating an alternate reality where people who walk by a piece of trash without picking it up receive negative feedback indicating that they are not acting properly.

Besides, because of the diversity of the community, there isn’t a shared standard for recycling that everyone agrees upon. Having always been rather ignorant about the environment, I get confused when I throw away an empty Leban bottle: should I put it in general waste or plastics? The bottle is definitely recyclable, but only after I clean it. Recycling can be extremely complicated: I still remember how shocked I was when the RA told us that we should recycle the lid of a Starbucks cup but throw the paper cup into general waste. By creating an educational environment that mimics what actually happens on campus, we hope to teach people how to recycle in an entertaining way. Through repeated interaction within our scene, users may come to see recycling as less burdensome as they become more familiar with it.

Process and Implementation

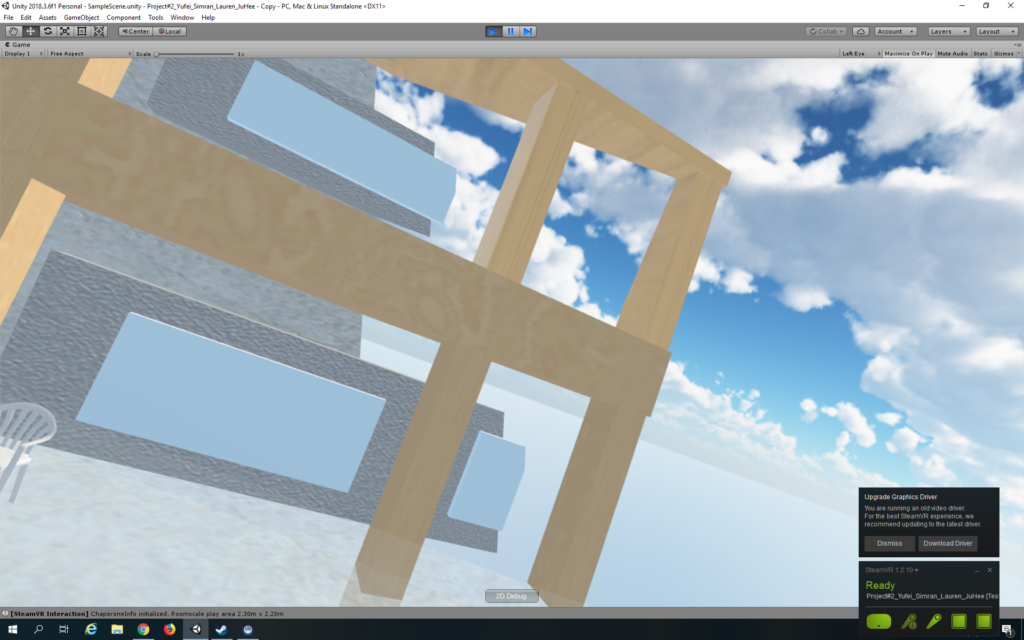

The tasks were divided up: Ju Hee and Lauren were in charge of the environment, while Simran and I were in charge of the interaction. After the environment was created, our scene looked like this:

When Simran and I started to work on the interaction with trash in our environment, we found a lot of problems with the environment. First, because we failed to set up our VR station when we first started the project, we didn’t have a sense of the size of our VR space and how it is reflected in Unity. If I had realized that we needed to set up the VR station before Lauren and Ju Hee started to build the environment, we could have saved a lot of the time and energy we spent rescaling the space. The environment was too large, so the users’ movement was not noticeable: users couldn’t really tell that they were moving inside the environment. So we decided to add teleporting. We divided our tasks: I was mainly in charge of the teleporting and Simran focused on the interactions, but we helped each other out throughout the process.

I went through several tutorials to understand how teleporting in SteamVR works in Unity. Here are the links to the tutorials: https://unity3d.college/2016/04/29/getting-started-steamvr/

https://vincentkok.net/2018/03/20/unity-steamvr-basics-setting-up/

At first, I decided to place teleport points next to each piece of trash, so that users could easily reach a piece of trash by aiming at the right teleport point. Then I realized that, since we have such a huge space, users would never be able to go to the areas where there is no trash. So I thought it would be nice to make the whole space teleportable: users should be free to move around our space, and they should also have the choice of going directly to the trash and completing the training if they are not interested in exploring our VR campus.

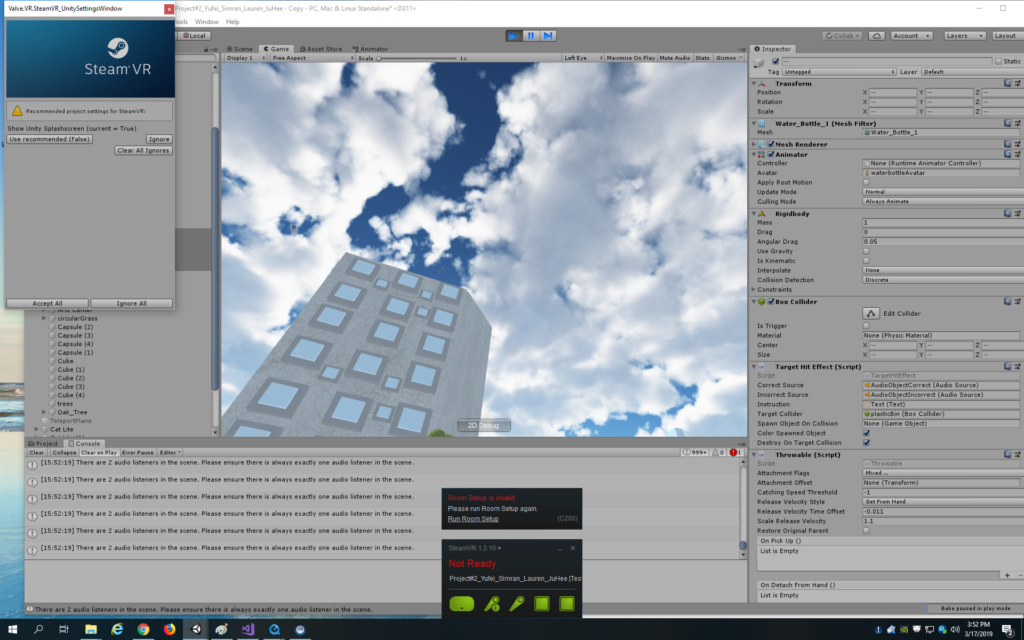

Adding the teleporting object to the scene, setting up the teleport points in the environment, and attaching the TeleportArea script to the ground were easy. However, it became frustrating when we had to figure out the scale and the position of our camera. The environment was built in such a way that the ground was not at position (0, 0, 0), and the objects were not sitting flush on the ground. As a result, when we teleported, we ended up beneath the buildings.

At first I tried to change the y-position of the camera so that we could actually see everything, but after raising the camera we could no longer see our controllers because they were so far away. Then I tried to raise the y-position of the player, but we were still teleported to a place below the ground. Then I figured that, instead of making the ground itself teleportable, I could create a separate teleportable plane and raise it slightly above the ground. That fixed the problem.
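The idea behind the raised plane can be sketched in a few lines. This is only an illustration in Python, not the Unity setup we actually used: the function name `teleport_plane_height`, the sample ground heights, and the `margin` value are all made up for the example.

```python
from typing import Iterable


def teleport_plane_height(ground_heights: Iterable[float], margin: float = 0.1) -> float:
    """Pick a y-position for a flat teleport plane.

    ground_heights: hypothetical y-values sampled from the uneven ground mesh.
    margin: hypothetical clearance so the player never lands below the ground.
    """
    # Sit just above the highest ground sample, so every teleport
    # destination on the plane is above the visible ground.
    return max(ground_heights) + margin


# Ground that is not at y = 0, as in our scene (values are invented).
samples = [-2.4, -2.1, -2.25]
plane_y = teleport_plane_height(samples)
```

The point is that the teleport target is decoupled from the ground mesh: wherever the ground happens to sit, the plane stays slightly above it.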

I also doubled the scale of everything so that the proportions looked right. Then we found several problems when we viewed the environment through the headset. First, the buildings, or parts of them, disappeared when we looked at them.

Then I figured out that the camera’s nearest and farthest viewing distances (its near and far clipping planes) should be adjusted according to the scale of our environment.
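To show why the buildings vanished after rescaling, here is a small Python sketch of the reasoning. It is not Unity code: `ClipRange`, `scale_clip_range`, and all the numbers are assumptions for illustration. In Unity the corresponding camera settings are the near and far clipping planes.

```python
from dataclasses import dataclass


@dataclass
class ClipRange:
    near: float  # closest distance the camera still renders
    far: float   # farthest distance the camera still renders

    def shows(self, distance: float) -> bool:
        # Anything closer than `near` or farther than `far` is not drawn.
        return self.near <= distance <= self.far


def scale_clip_range(clip: ClipRange, scale: float) -> ClipRange:
    # Assumption: stretch both limits by the same factor as the scene.
    return ClipRange(clip.near * scale, clip.far * scale)


# Invented numbers: a building 400 units away, visible before rescaling.
original = ClipRange(near=0.5, far=500.0)
building_distance = 400.0

# Doubling the scene also doubles the distance to the building,
# which pushes it past the unchanged far limit.
scene_scale = 2.0
scaled_distance = building_distance * scene_scale

adjusted = scale_clip_range(original, scene_scale)
```

With these numbers the building falls outside `original` once the scene is doubled, and back inside `adjusted`. One caveat of scaling the near limit too: objects very close to the camera, such as the controllers, may get cut off, so the near value may need to stay small.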

Another problem we encountered was how to get close to the trash. Because our scene is at such a huge scale, we could not even touch the trash lying on the ground because it was so far away. So we decided to have the trash float in the air, at approximately the same level as the teleport plane, so that users can grab it with the controllers. However, if we simply disable gravity on the trash, it flies away.

But if we enable gravity and “Is Kinematic” at the same time, the trash isn’t throwable: it can’t be dropped into the trash bin. So I searched online for the correct settings for the Throwable script in SteamVR and also asked Vivian how her group did it. To make it work properly, we have to set “Use Gravity” to true and “Is Kinematic” to false in the Rigidbody. Then, for the Throwable script, we need to select “DetachFromOtherHand”, “ParentToHand” and “TurnOffGravity” as the attachment flags.
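The combinations we tried can be summarized in a small Python model. This is a sketch of our reasoning, not the SteamVR API: `TrashSettings` and `check` are hypothetical names, and the `AttachmentFlags` enum lists only the three flags mentioned above, while the real SteamVR enum has more.

```python
from dataclasses import dataclass
from enum import Flag, auto
from typing import List


class AttachmentFlags(Flag):
    # Only the three flags named in the text (hypothetical Python mirror).
    DETACH_FROM_OTHER_HAND = auto()
    PARENT_TO_HAND = auto()
    TURN_OFF_GRAVITY = auto()


@dataclass
class TrashSettings:
    use_gravity: bool
    is_kinematic: bool
    attachment_flags: AttachmentFlags


REQUIRED_FLAGS = (
    AttachmentFlags.DETACH_FROM_OTHER_HAND
    | AttachmentFlags.PARENT_TO_HAND
    | AttachmentFlags.TURN_OFF_GRAVITY
)


def check(settings: TrashSettings) -> List[str]:
    """Return the problems we ran into for a given combination."""
    problems = []
    if not settings.use_gravity:
        problems.append("no gravity: released trash drifts away")
    if settings.is_kinematic:
        problems.append("kinematic: trash ignores physics and cannot be thrown")
    missing = REQUIRED_FLAGS & ~settings.attachment_flags
    if missing:
        problems.append(f"missing attachment flags: {missing}")
    return problems


# The combination that worked for us.
working = TrashSettings(use_gravity=True, is_kinematic=False,
                        attachment_flags=REQUIRED_FLAGS)
```

Calling `check(working)` returns an empty list, while turning gravity off or turning “Is Kinematic” on reports the corresponding problem.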

I also added ambient sound to the scene, created the sound objects for positive and negative sound feedback, set up the sound script, and attached it properly to each sound object.

Reflection/Evaluation

One of the takeaways from this project is that, for a VR experience, the scene and the interaction cannot and should not be separated. After dividing up the tasks, Simran and I did not really communicate with Lauren and Ju Hee. Then we took over an already-built environment that was extremely large, with objects that were somewhat off scale. We spent a lot of time fixing the scale of everything, and I felt really bad about not communicating with them beforehand. We could have saved a lot of time.

Another thing I should bear in mind for future projects is that we should never ignore the fact that the hardware might go down. We almost ran out of time when creating the interactions because the sensors kept disconnecting from each other and the controllers kept disappearing from the scene. We should have planned everything ahead rather than leaving everything to the last minute.

Overall, I enjoyed the process of learning from peers and from obstacles, and our project turned out well: we hadn’t expected users to be so engaged in our game and to have so much fun throwing trash.